Free Viewpoint RGB-D Video Dataset

Introduction

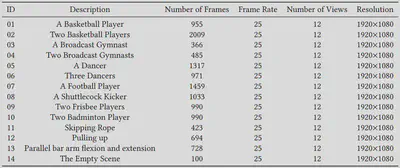

Our free viewpoint RGB-D video dataset is a dynamic, synchronized HD RGB-D dataset for free viewpoint synthesis, captured under conditions close to practical applications. It consists of 14 groups of video sequences: 13 groups showing human motion (e.g., dancing, playing basketball, and playing football) and 1 group showing an empty scene, as shown in the figure.

Dataset Collection and Post-Processing

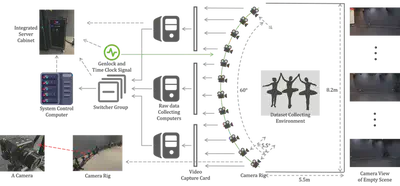

We designed the capture environment and system shown in the figure.

The capture environment is a clean, uniformly lit indoor stage, 8.2 m long and 5.5 m wide. The capture system consists of 12 Panasonic DC-BGH1 cameras, 3 computers for raw data collection, a system control computer, a switcher group, and a synchronization signal processor. The 12 cameras are evenly spaced on an arc in front of the scene, about 46 cm and 5.5° apart.
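The text gives only the spacing between adjacent cameras. Assuming the cameras lie on a circular arc and that the 46 cm figure is the straight-line (chord) distance between neighbours, the arc radius follows from r = d / (2 sin(θ/2)). The short Python sketch below computes that radius and lays out hypothetical camera positions; the coordinate frame (arc centre at the origin, middle of the arc on the +z axis) is our own illustrative choice, not something defined by the dataset.

```python
import math

# Spacing values from the dataset description; the chord interpretation is an assumption.
NUM_CAMERAS = 12
SPACING_M = 0.46    # distance between adjacent cameras, treated as a chord length
SPACING_DEG = 5.5   # angle between adjacent cameras, seen from the arc centre


def arc_radius(chord_m: float, angle_deg: float) -> float:
    """Radius of a circle on which two points `angle_deg` apart are `chord_m` apart."""
    return chord_m / (2.0 * math.sin(math.radians(angle_deg) / 2.0))


def camera_positions(n: int, chord_m: float, angle_deg: float) -> list[tuple[float, float]]:
    """Hypothetical (x, z) positions of n cameras evenly spaced on a circular arc.

    The arc centre is at the origin and the arc is symmetric about the +z axis.
    """
    r = arc_radius(chord_m, angle_deg)
    half_span = (n - 1) * angle_deg / 2.0
    positions = []
    for i in range(n):
        theta = math.radians(-half_span + i * angle_deg)
        positions.append((r * math.sin(theta), r * math.cos(theta)))
    return positions


radius = arc_radius(SPACING_M, SPACING_DEG)
cams = camera_positions(NUM_CAMERAS, SPACING_M, SPACING_DEG)
total_span_deg = (NUM_CAMERAS - 1) * SPACING_DEG
print(f"radius = {radius:.2f} m, total span = {total_span_deg} deg")
```

Under these assumptions the cameras sit on a circle of radius roughly 4.8 m and together cover about 60.5° of arc (11 gaps of 5.5°). Reading 46 cm as an arc length instead of a chord length changes the radius by only about 2 mm, so the interpretation hardly matters at this scale.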

An alumina calibration chessboard is used to obtain accurate intrinsic and extrinsic parameters for the 12 cameras. We then estimate depth images for all frames of the video sequences with COLMAP. For each camera view, we compute a common background depth map as the average of the non-empty parts of the depth images corresponding to the RGB frames captured by that camera. Finally, we improve the quality of the depth images through background replacement and foreground filtering.
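The background-averaging step can be sketched with NumPy as a per-pixel mean that ignores empty pixels. This is a minimal illustration, not the authors' code: it assumes each camera's depth images are stacked into an array of shape (frames, H, W) and that an empty pixel is stored as 0. The `replace_background` function is a hypothetical, simplified reading of "background replacement" (fill empty pixels of a frame from the background map); the actual procedure used for the dataset is not described here.

```python
import numpy as np


def background_depth(depths: np.ndarray) -> np.ndarray:
    """Per-pixel mean of the non-empty depth values over all frames of one camera.

    depths: array of shape (num_frames, H, W); empty pixels are assumed to be 0.
    Pixels that are empty in every frame stay 0 in the result.
    """
    valid = depths > 0
    counts = valid.sum(axis=0)
    sums = np.where(valid, depths, 0).sum(axis=0)
    # Divide only where at least one frame had a valid depth value.
    return np.divide(sums, counts, out=np.zeros(sums.shape, dtype=np.float64), where=counts > 0)


def replace_background(depth: np.ndarray, background: np.ndarray) -> np.ndarray:
    """Hypothetical simplification: fill empty pixels of one frame with the background depth."""
    return np.where(depth > 0, depth, background)


# Toy example: two 2x2 depth frames from the same camera.
frames = np.array([[[0, 2], [4, 0]],
                   [[6, 0], [2, 0]]], dtype=np.float32)
bg = background_depth(frames)
filled = replace_background(frames[0], bg)
```

Excluding empty pixels from the average matters: a plain `frames.mean(axis=0)` would pull the background depth toward 0 wherever reconstruction failed in some frames.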

Download

2024-01-29 update: the videos are now encoded with an improved compression method. If you downloaded the dataset before this date, we recommend re-downloading the current version.


Our dataset is free to use for academic research only; commercial use is not permitted. If you use these video sequences in any published work, please cite our paper:

Guo S, Zhou K, Hu J, et al. A new free viewpoint video dataset and DIBR benchmark[C]//Proceedings of the 13th ACM Multimedia Systems Conference. 2022: 265-271.

The video sequences can be downloaded from our File Share or Dropbox. For any questions, please contact the administrator, Donghui Feng.


Camera parameters are provided in this repository.
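As an illustration of how intrinsic and extrinsic parameters combine with a depth map, the sketch below reprojects one pixel from a source view into a destination view under the standard pinhole model, the basic warping step in depth-image-based rendering. The file format and conventions of the provided parameters are not described here, so this assumes world-to-camera extrinsics (x_cam = R X + t) and depth stored as the z coordinate in the camera frame; check the repository's files before relying on either. The intrinsic matrix and the 0.5 m camera offset in the example are hypothetical values.

```python
import numpy as np


def reproject_pixel(u, v, depth, K_src, R_src, t_src, K_dst, R_dst, t_dst):
    """Map pixel (u, v) with the given depth from a source view into a destination view.

    Assumes world-to-camera extrinsics (x_cam = R @ X_world + t) and that `depth`
    is the z coordinate of the point in the source camera frame.
    Returns (u', v', depth') in the destination view.
    """
    # Back-project the pixel to a 3-D point in the source camera frame.
    p_src = depth * np.linalg.solve(K_src, np.array([u, v, 1.0]))
    # Source camera frame -> world frame (inverse of the rigid transform).
    X_world = R_src.T @ (p_src - t_src)
    # World frame -> destination camera frame.
    p_dst = R_dst @ X_world + t_dst
    # Perspective projection with the destination intrinsics.
    uvw = K_dst @ p_dst
    return uvw[0] / uvw[2], uvw[1] / uvw[2], p_dst[2]


# Hypothetical parameters: identical intrinsics, destination camera shifted 0.5 m along +x.
K = np.array([[1000.0, 0.0, 960.0],
              [0.0, 1000.0, 540.0],
              [0.0, 0.0, 1.0]])
I3 = np.eye(3)
t_zero = np.zeros(3)
t_shift = np.array([-0.5, 0.0, 0.0])  # t = -R @ C for a camera centre C = (0.5, 0, 0)

u2, v2, d2 = reproject_pixel(1000.0, 600.0, 5.0, K, I3, t_zero, K, I3, t_shift)
```

For a pure horizontal shift the disparity is f · baseline / depth, here 1000 × 0.5 / 5 = 100 pixels, so the point moves from u = 1000 to u = 900 while v and the depth stay the same.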

Copyright (c) 2022, Shanghai Jiao Tong University. All rights reserved.